Stop doing stupid stuff with your user tests!

Hi there internet stranger, sorry about the clickbaity title, but we have to talk about this. It pains me tremendously to see bad practices applied to something so close to my heart.

User testing and eye tracking are very useful tools to aid in the optimization of a website. And like all tools, they can be used differently for different problems, but they are also totally useless for certain jobs. A hammer was great to have on hand when mending my tree-house as a kid, but it was significantly less useful when it came to mending Dad's window after I had hit a ball through it.

The big misunderstanding with tests and number of users.

Innumerable times I have heard people reference the now-famous study by Jakob Nielsen and Tom Landauer, the one behind the advice to test with 5 users, while at the same time missing the point of what the authors are getting at.

With as few as 5 users, you are dealing with a result that has to be analysed qualitatively and not quantitatively. Despite this, both the people conducting tests and the people seeing the results are constantly drawing the wrong (quantitative) conclusions.

Qualitative: Data often used for explorative research, discovering behaviours and the reason behind them using smaller sample sizes.

Quantitative: Aggregate data from larger samples, used to show how often something happens. For example, the percentage of users who abandon their visit at the checkout.

How should we be handling data from tests with a small number of users?

User tests with a small group of people are a great, resource-efficient way to test the waters. They can be used as an iterative part of a design process, or as a part of ongoing optimization to provide insight into possible behaviour or to catch usability problems that have slipped through the cracks. So there is nothing wrong with testing with only 5 users. In fact, it’s a really good thing to do in many situations, but don’t interpret the results as indications of how often something happens.

But, but, but if 4 out of 5 did a certain thing it must surely mean something

The moment someone tries to infer that something is a frequent behaviour based on how many of a small number of test users did it, they are overstepping the boundary of their chosen research method.

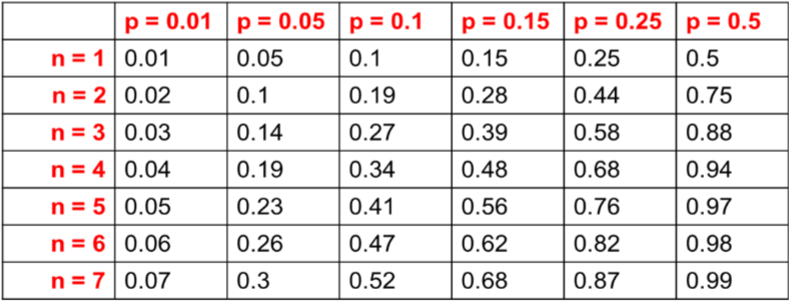

The usual answer I get is “But, but, but if 4 out of 5 did a certain thing it must surely mean something”. And it’s true that it slightly raises the chance that the behaviour is frequent, but the group is so small that the result will still vary greatly from one test to the next. Try rolling a die 5 times and using the resulting data set to establish how frequently it lands on each side… not a particularly good method, right? And since we humans are bad at handling uncertainty and small sample sizes, people tend to interpret “4 out of 5” statements as if a certain behaviour is the norm, rather than as “it’s a little bit likely that this behaviour is the norm”, which is all a small sample can tell us.
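To put rough numbers on that, here is a minimal sketch. It assumes each test user independently shows the behaviour with the same probability as the wider visitor population (a simple binomial model, which real recruiting rarely matches exactly). The function name `prob_at_least` and the example rates are made up for illustration.

```python
from math import comb

def prob_at_least(k: int, n: int, p: float) -> float:
    """Chance that k or more of n independent test users show a behaviour
    whose true frequency among all visitors is p (binomial model)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))

# How easily does a "4 out of 5" result appear for different true frequencies?
for true_rate in (0.3, 0.5, 0.8):
    chance = prob_at_least(4, 5, true_rate)
    print(f"true rate {true_rate:.0%}: chance of seeing 4+ of 5 = {chance:.1%}")
```

Under these assumptions, a behaviour that only half of all visitors share still produces a “4 out of 5 or more” result in nearly one test out of five, so the headline number alone cannot tell a 50% behaviour from an 80% one.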

When I have come this far in discussing the subject, one of two objections usually pops up.

If I can’t say how often something happens user testing is pointless!

or

But this does not apply to us, we test 8 or 10 or whatever number of users!

Let’s address these in turn.

If I can’t say how often something happens user testing is pointless!

Sure, we can’t extrapolate the frequency of a behaviour from a small group of test users and treat it as representative of the entire population of visitors. But nothing prevents us from reasoning about the finding and its implications, or from cross-checking against other sources of quantitative data if that is necessary to help us come to a decision.

Scenario:

Suppose we ran a user test in which some users got the input of a required form field wrong (say the field only accepts one of several possible formats for social security numbers, and it provides no help or instructions for the user). It’s a good finding even if we don’t know how frequent this behaviour is among visitors. It’s definitely something that should be dealt with, since it can lead to frustration for users and possibly even prevent them from accomplishing the task they came to perform. Since any reasonable fix would come without major downsides for the user experience, we can just add it to our to-do list of improvements from the test.

Quick fix:

To fix the issue, we start off by adding some help text to the field to assist users. But we want a low-friction form, so ideally we would change the logic behind the field to accept any viable input and then transform it to the right format for our back-end database. Coding resources are tight, though, so we want to make sure this change would impact loads of people.
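As a rough illustration of what “accept any viable input and transform it” could look like, here is a minimal sketch. The format is an assumption made up for the example: a hypothetical 9-digit number that the back end stores as `XXX-XX-XXXX`. The function name `normalize_id_number` is likewise invented, and real social security number rules differ by country.

```python
import re
from typing import Optional

def normalize_id_number(raw: str) -> Optional[str]:
    """Return the canonical form, or None if the input is not usable.

    ASSUMPTION: a hypothetical 9-digit number stored as 'XXX-XX-XXXX'.
    Swap in the rules of the identifier your back end really expects.
    """
    # Drop the separators users commonly type: spaces, dots and dashes.
    digits = re.sub(r"[\s.\-]", "", raw)
    # Anything that is not exactly 9 digits is rejected rather than guessed at.
    if not re.fullmatch(r"[0-9]{9}", digits):
        return None
    return f"{digits[:3]}-{digits[3:5]}-{digits[5:]}"

print(normalize_id_number("123 45 6789"))
print(normalize_id_number("123.45.6789"))
print(normalize_id_number("12345"))
```

Inputs that cannot be normalized return `None`, which is where the help text from the quick fix would still come into play.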

Deep dive:

We know it’s in a required field, so it has the potential to impact many users, but we don’t know how many users prefer an “incorrect” input format.

We could go out and search for general statistics on how people enter social security numbers or, better still, we might have the answer in our own quantitative site data, say, through error event tracking in Google Analytics.

Now that we know what we are looking for, we manage to find it in Google Analytics (or we realize there are gaps in our tracking). We are ready to prioritize it in our dev backlog. Findings from a user test can span from a straightforward “just do it”, simply because it’s something that obviously should be fixed, to findings that turn into a hypothesis that ends up being validated through an A/B test.

Why an A/B test? Simply because there were both upsides and downsides to the actions the finding pointed us towards, and we needed to weigh the impact of those aspects against each other for our audience at large.

In either case, the user test was not pointless: it brought something to our attention and gave us a further understanding of it and its possible causes. Depending on what we find, we just proceed in different ways.

“But this does not apply to us, we test 8 or 10 or whatever number of users!”

You have to go up to quite a large number of users and get very definitive results to start drawing statistically valid quantitative conclusions. It is possible for bigger-budget projects, but it will take way more than 10 users, and if you are going down this route you should really make sure you are not wasting resources getting findings from user testing that would have been better gathered with another methodology.

This being said, we test with different numbers of users all the time. However, it is rarely because we are aiming for a number of users where we can start aggregating and handling data quantitatively.

So why are we varying the number of users then? To increase the probability of seeing different types of behaviour. Is a large, previously untested feature about to go online, and you have one test to find and fix issues before it happens? Then you want to be sure the risk of missing important findings is low, so you’d better amp up the number of test users. Maybe you are following a better model for developing and releasing features…

Say you are doing iterative testing from early on in the project. In that case, 5 users per test is sufficient, because you have plenty of chances to catch things later on as well. Finally, if your test aspires to find examples of infrequent behaviour, the number of test participants should increase if you want a reasonable chance of encountering those examples.
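The reasoning about group size can be sketched with a little arithmetic. Assuming each participant independently shows a given behaviour with probability p, the chance of seeing it at least once among n participants is 1 - (1 - p)^n. The function names below are made up for illustration, and the 31% figure is the per-user detection rate usually quoted from Nielsen and Landauer's work, used here only as an example value.

```python
from math import ceil, log

def chance_of_seeing(p: float, n: int) -> float:
    """Chance that at least one of n participants shows a behaviour
    that each participant shows independently with probability p."""
    return 1 - (1 - p) ** n

def users_needed(p: float, target: float) -> int:
    """Smallest number of participants that gives at least `target`
    chance of seeing the behaviour once (requires 0 < p < 1, 0 < target < 1)."""
    return ceil(log(1 - target) / log(1 - p))

# A fairly common behaviour versus an infrequent one, both with 5 participants.
print(f"p = 31%, 5 users: {chance_of_seeing(0.31, 5):.0%} chance of seeing it")
print(f"p = 5%,  5 users: {chance_of_seeing(0.05, 5):.0%} chance of seeing it")
print(f"p = 5%, users needed for an 85% chance: {users_needed(0.05, 0.85)}")
```

Under this simple model, 5 participants are very likely to surface a common behaviour at least once, while a behaviour that only 1 in 20 people show needs several times as many participants for the same odds. Note that this only tells you whether you are likely to see the behaviour at all, not how often it happens.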

So it’s good to vary the number of users based on the research question, but if you want to start answering quantitative questions from your test make sure there are no other methods available to serve your needs better.

Conclusion

If all you have is a hammer, everything looks like a nail. User testing with a small number of users is a great and efficient way to get a better understanding of how users can interact with your page. But don’t try to use it for every question if you have better tools available for the job.

The internet is great for user research! We have a multitude of possible data sources available to us, and it’s easier than ever to get good quantitative data. Therefore user testing is seldom the right tool for frequency questions, even if people possessing only the user testing tool might think it’s great to say 4 out of 5 did something.

The moment you hear those types of numbers in a presentation, or even consider saying them yourself, stop and consider the real sample size: was it 80 out of 100, 40 out of 50 or simply 4 out of 5? Because chances are it’s the wrong answer to the wrong question, when the focus should be on what happened and why it happened. “Why” is where the strength of user testing really lies; “how often” should come from your web analytics data.
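For anyone who wants to see how much the real sample size matters, here is a minimal sketch using the Wilson score interval, one common way to put an uncertainty range around a proportion. It assumes the participants are a random, independent sample of your visitors, which a recruited test panel often is not, so treat the ranges as optimistic. The function name `wilson_interval` is made up for the example.

```python
from math import sqrt
from typing import Tuple

def wilson_interval(successes: int, n: int, z: float = 1.96) -> Tuple[float, float]:
    """Approximate 95% Wilson score interval for a proportion (z = 1.96)."""
    p_hat = successes / n
    denom = 1 + z**2 / n
    centre = (p_hat + z**2 / (2 * n)) / denom
    half_width = z * sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    return centre - half_width, centre + half_width

# The same "80%" headline with three very different sample sizes.
for successes, n in ((4, 5), (40, 50), (80, 100)):
    low, high = wilson_interval(successes, n)
    print(f"{successes} out of {n}: plausible range {low:.0%} to {high:.0%}")
```

With 4 out of 5, the plausible range stretches from well under half of all visitors to nearly everyone, while 80 out of 100 narrows it to a band of roughly fifteen percentage points around the same headline figure.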